News!

Started PhD in Wireless Communications with a focus on millimeter wave for vehicle-to-infrastructure (V2I) communication

Graduated with a Master's degree in Computer Science from UNL (Lincoln, NE, May 2025)

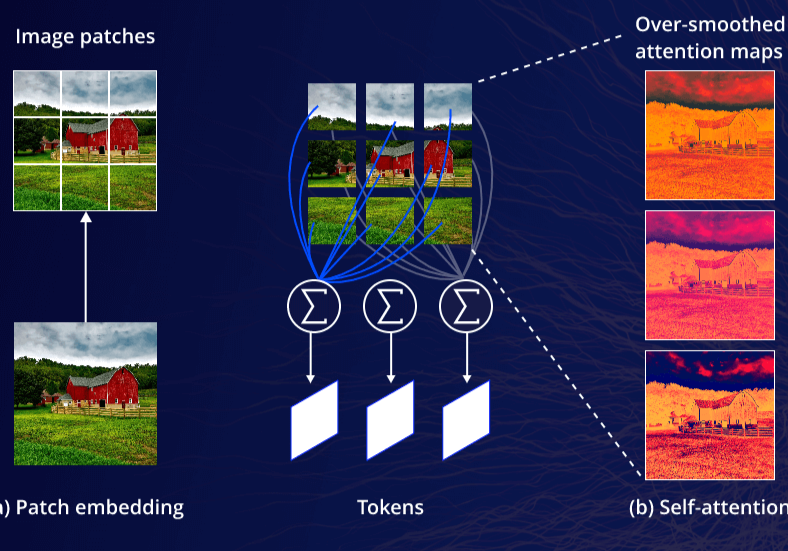

Presented "Perception-based Runtime Monitoring and Verification for Human-Robot Construction Systems" at ACM/IEEE MEMOCODE 2024 (Best Paper Candidate) (Raleigh, NC, Oct 2024)

DAC 2024 Workshop Poster Presentation - PerM: Tool for Perception-based Runtime Monitoring for Human-Construction Robot Systems (San Francisco, June 2024)

Graduate Teaching Fellowship Program at UNL (Lincoln, April 2024)

IROS 2023 Formal Methods Workshop Poster Presentation - Vision-based Runtime Monitoring for Human-Construction Robot Systems (Detroit, Oct 2023)

Successfully passed PhD qualifying examination (Lincoln, May 2023)

Presented research at Graduate Student Symposium (Lincoln, March 2023)